Caldwell 6

Caldwell 6 - Cat’s Eye Nebula

The Cat's Eye Nebula is a planetary nebula in the northern constellation of Draco, discovered by William Herschel on February 15, 1786. It was the first planetary nebula whose spectrum was investigated by the English amateur astronomer William Huggins, demonstrating that planetary nebulae were gaseous and not stellar in nature. Structurally, the object has had high-resolution images by the Hubble Space Telescope revealing knots, jets, bubbles and complex arcs, being illuminated by the central hot planetary nebula nucleus (PNN). It is a well-studied object that has been observed from radio to X-ray wavelengths.

source: Wikipedia

NGC/IC: NGC6543

Other Names: Caldwell 6, Cat’s Eye Nebula

Object: Planetary Emission Nebula

Constellation: Draco

R.A.: 17h 58m 33.423s

Dec: +66° 37′ 59.52″

Transit date: 09 July

Transit Alt: 61° N

Conditions

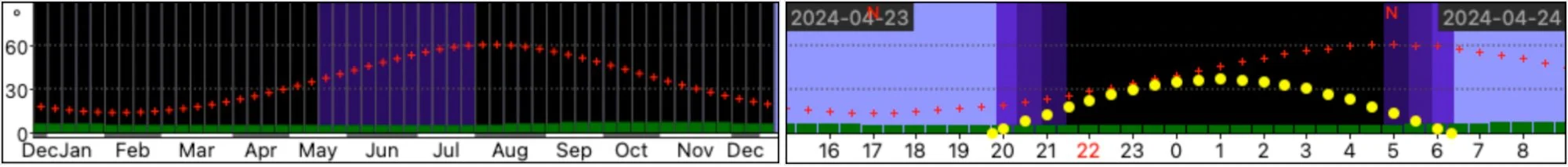

Caldwell 6 is visible all year round, but visibility is best during summer. The images were taken in April-May, a bit early in the season for this target. However, there was a lot of interference from the moon, and the need for a narrowband target led to the Cat’s Eye Nebula being imaged. Because of the moon and the frequent cloud cover, the images were taken over seven different nights, each with about 2-5 hours of imaging time on the target.

Equipment

To image Caldwell 6, the standard rig at the remote observatory was used. This is built around a Planewave CDK-14 telescope on a 10Micron GM2000 mount, coupled to a Moravian C3-61000 Pro full-frame camera. The RoboTarget module in Voyager Advanced allowed automatic scheduling to find the optimum time slots for C6 relative to altitude and moon position.

Telescope: Planewave CDK14, Optec Gemini Rotating focuser

Mount: 10Micron GM2000HPS, custom pier

Camera: Moravian C3-61000 Pro, cooled to -10 °C

Filters: Chroma 2” RGB, Ha (3nm) and OIII (3nm) unmounted, Moravian filterwheel “L”, 7-position

Guiding: Unguided

Accessories: Compulab Tensor I-22, Windows 11, Dragonfly, Pegasus Ultimate Powerbox v2

Software: Voyager Advanced, Viking, Mountwizzard4, Astroplanner, PixInsight 1.8.9-2

Imaging

Planetary nebulae are typically small, and require a long focal length telescope to fill a reasonable amount of the frame. Still, the main image at the top of this page shows a somewhat cropped version of the image. The full image can be seen here. In total, more than 23h of data were collected, mainly using Ha and OIII narrowband filters. The RGB frames were mainly shot for the stars. The core of the nebula is super bright, so a second set of Ha and OIII data was collected to capture that core using an exposure time of 180s. This was significantly shorter than the regular exposure time of 10 minutes.

Resolution: 9400 × 6200 px (58.3 MP)

Focal length: 2585 mm @ f/7.2

Pixel size: 3.8 µm

Resolution: 0.30 arcsec/px

Field of View: 48' 7.2" x 32' 6.0"

Image center: RA: 17h 58m 33.000s, Dec: +66° 38′ 03.81″
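The pixel scale listed above follows from the pixel size and focal length. As a rough check, here is a minimal sketch using the small-angle approximation; the function names are made up for illustration. The field of view it estimates is close to, but not identical with, the listed value, which presumably comes from a plate solve of the actual frame.

```python
ARCSEC_PER_RADIAN = 206_264.806  # number of arcseconds in one radian


def pixel_scale_arcsec(pixel_size_um: float, focal_length_mm: float) -> float:
    """Pixel scale in arcsec/px using the small-angle approximation."""
    pixel_size_mm = pixel_size_um / 1000.0
    return ARCSEC_PER_RADIAN * pixel_size_mm / focal_length_mm


def field_of_view_arcmin(n_pixels: int, scale_arcsec: float) -> float:
    """Angular extent in arcminutes covered by n_pixels at the given scale."""
    return n_pixels * scale_arcsec / 60.0


# Values taken from the table above: 3.8 µm pixels, 2585 mm focal length.
scale = pixel_scale_arcsec(3.8, 2585.0)
width = field_of_view_arcmin(9400, scale)
height = field_of_view_arcmin(6200, scale)

print(f"{scale:.2f} arcsec/px")
print(f"{width:.1f}' x {height:.1f}'")
```

At roughly 0.3 arcsec/px the image is heavily oversampled for typical seeing, which is one reason a cropped version still holds up well.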

Processing

All images were calibrated using darks (50) and flats (25), then registered and integrated using the WeightedBatchPreProcessing (WBPP) script in PixInsight.

An RGB stars-only image was created by combining the red, green and blue data, calibrating the colours with SPCC, deconvolving with BXT and extracting the stars using StarXTerminator. Unfortunately, some bright parts of the nebula came along with the stars. These were removed using the CloneStamp tool. Star stretching was performed using a new script, called ‘Star Stretch’, developed by Franklin Marek from SetiAstro. This script is designed to stretch stars from stars-only images and ensures that the star colour is properly maintained during the process. There are two variables to adjust: the amount of stretch applied and the amount of colour that ends up in the final image. I went with values slightly above the defaults.

The narrowband data caused two big issues. The first one was the super bright nucleus of the nebula. In the regular 10min exposures, there was very little detail left, with most parts of the core clipping. To expose for the nucleus, both a set of short (30s and 60s) luminance exposures and a set of 180s narrowband exposures were shot. In the end the impression was that most detail was present in the narrowband data, so I decided to use this. The regular way to combine exposures of different lengths into an HDR image is the HDRComposition tool. Although this worked, it looked like a lot of noise was introduced. It seemed that the noise from the short exposure data (only 1h of data for each filter) had persisted into the final image, rather than the noise levels of the 10h of data for each filter from the 600s exposures. So in the end I decided to use the mask that was generated by the HDRComposition tool and substitute the clipped data from the 600s exposures with that of the 180s exposures using PixelMath.
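The mask-based substitution can be sketched in NumPy as follows. This is only an illustration of the idea, not the actual PixelMath expression used. It assumes linear, registered images scaled to [0, 1], a mask that is 1 where the long exposure clips, and that the short exposure can be brought to the same flux scale by multiplying with the exposure-time ratio. Background offsets are ignored, and the function name is hypothetical.

```python
import numpy as np


def substitute_clipped_core(long_img, short_img, mask, exposure_ratio):
    """Replace masked (clipped) pixels of the long exposure with short-exposure data.

    long_img, short_img: linear, registered images scaled to [0, 1].
    mask: 1 where the long exposure clips, 0 elsewhere (soft edges allowed).
    exposure_ratio: long / short exposure time, e.g. 600 / 180.
    """
    # Bring the short exposure onto the flux scale of the long exposure.
    short_scaled = short_img * exposure_ratio
    # Mask-weighted substitution: long data outside the mask, short data inside.
    combined = long_img * (1.0 - mask) + short_scaled * mask
    # The rescaled core can exceed 1, so renormalise to keep the range [0, 1].
    return combined / max(1.0, float(combined.max()))


# Tiny example: one background pixel and one clipped core pixel.
long_img = np.array([0.10, 1.00])
short_img = np.array([0.03, 0.50])
mask = np.array([0.0, 1.0])
print(substitute_clipped_core(long_img, short_img, mask, 600 / 180))
```

Because only the masked pixels come from the short exposures, the rest of the frame keeps the lower noise of the 10h integration, which is the point of taking this route instead of a full HDR merge.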

Strange reflection-type donuts in both Ha and OIII images appear to be a diffraction phenomenon caused by the microlenses on the sensor. Under certain circumstances, such as with this super bright star, they can give reflections onto the coverglass of the sensor causing these patterns. A mathematical explanation can be found in this thread.

Removing ‘Diffraction donuts’ using an Adam Block technique

The second big problem was a weird phenomenon that looked like some kind of reflection in the imaging train. It was only visible in the narrowband data and was unrelated to flats. It clearly looked like something caused by the very bright nucleus of the nebula. It turned out that this was a diffraction phenomenon that can occur within the sensor. The microlenses of the sensor can act as a diffraction grating, with reflections bouncing off either the inner surface of the cover glass or the inside of its outer surface. A lengthy discussion on this topic, including some detailed mathematical modelling, can be found on CloudyNights. So the good news is that this appears to be a regular optical phenomenon that simply happens under certain circumstances, and there is not much that can be done to prevent it. But that still does not answer the question of how to get rid of it.

A logical method would be to extract the stars and use the CloneStamp tool to stamp away the brighter areas bit by bit. Unfortunately this never gives a very even result, and given the extent of the issue here, a different technique was applied. This technique is described by Adam Block in one of his PixInsight Horizons lessons. The first step is to create a mask that only selects the ‘donut-like’ shapes. This can be done using the GAME script. For the effect to blend in nicely in the end, it makes sense to apply some convolution to the mask before applying it. The next step is to determine a cut-off value for the brightness level of the ‘donut’ and the average value of the background in the immediate surroundings. Once those two values are known, PixelMath can be used to replace the brighter pixels in the donut with pixels that have the average background value. Selecting the right cut-off value and the right replacement background value takes some trial and error, but after a while the results get very close. Zoomed out, the uniform colour of those donuts may appear a bit darker, so make sure you are zoomed in deep to best assess the actual values.

As a final step, noise is added into the donuts, in the same amount as the rest of the image has. This also requires some experimentation with the settings of the NoiseGenerator process. But in the end it is possible to completely remove the donuts without affecting the rest of the image. Overall my feeling is that this method is a bit more work than traditional clone stamping, but that the result is a bit better.
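The three steps (mask, replace above a cut-off, add matching noise) can be sketched in NumPy as below. This is a simplified stand-in for the PixInsight workflow, not Adam Block's actual expressions. It assumes a single-channel image in [0, 1] and a mask, already blurred, that is 1 inside the donuts. The cut-off, background value and noise level are found by trial and error, and Gaussian noise is used as an approximation of the image noise.

```python
import numpy as np


def remove_donuts(image, mask, cutoff, background, noise_sigma, seed=0):
    """Flatten bright 'donut' pixels to the background level and re-add noise.

    image: single-channel image scaled to [0, 1].
    mask: 1 inside the donut areas, 0 elsewhere (soft edges blend the result).
    cutoff: pixels brighter than this are treated as part of the donut.
    background: average background value measured next to the donut.
    noise_sigma: standard deviation of the noise to add to replaced pixels.
    """
    rng = np.random.default_rng(seed)
    # Replacement value: flat background plus noise at the level of the image.
    replacement = background + rng.normal(0.0, noise_sigma, size=image.shape)
    # Only pixels brighter than the cut-off are replaced.
    patched = np.where(image > cutoff, replacement, image)
    # The mask restricts the change to the donut areas.
    out = image * (1.0 - mask) + patched * mask
    return np.clip(out, 0.0, 1.0)


# Synthetic example: a faint bright patch on a flat background.
img = np.full((6, 6), 0.05)
img[2:4, 2:4] = 0.08
donut_mask = np.zeros((6, 6))
donut_mask[1:5, 1:5] = 1.0
cleaned = remove_donuts(img, donut_mask, cutoff=0.06, background=0.05, noise_sigma=0.002)
print(cleaned.round(3))
```

The mask matters because a brightness cut-off alone would also hit stars and the nebula itself. Only pixels that are both inside the mask and above the cut-off are changed.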

An Adam Block technique to remove ‘donuts’ from the image. First use the GAME script to create a mask for the areas that show the donuts (left image). Then substitute the pixels that have the brightness of the donut with pixels that have the average background value (middle image). As a final step, add noise that is similar in pattern and amplitude to the noise in the rest of the image. The final result is a practically complete removal of the donuts, probably better than the standard CloneStamp tool would have given.

With these two hurdles out of the way, image processing could continue. An HOO image was created from the Ha and OIII data. This resulted in a very red background, probably due to the dominance of the Ha signal. Ultimately the plan was to create the right colours using the NarrowbandNormalization script from Bill Blanshan, but this works best on non-linear images. So as a quick fix BackgroundNeutralization was applied. Subsequent BXT and SXT resulted in a starless linear HOO image. I tried some different methods of stretching, but felt that GHS gave the best results here.
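An HOO combination maps Ha to red and OIII to both green and blue. The sketch below shows that mapping in NumPy, together with a much simplified stand-in for background neutralization that shifts each channel so the background medians match. It does not reproduce the BackgroundNeutralization process itself. The function names and the choice of background region are assumptions made for illustration.

```python
import numpy as np


def combine_hoo(ha, oiii):
    """Build an RGB image with Ha as red and OIII as green and blue."""
    return np.stack([ha, oiii, oiii], axis=-1)


def neutralize_background(rgb, background_region):
    """Shift each channel so its median in a background region matches the others.

    background_region: tuple of slices selecting a patch of empty sky.
    """
    # Per-channel median of the background patch, shape (3,).
    medians = np.median(rgb[background_region], axis=(0, 1))
    # Common target level: the mean of the three channel medians.
    target = medians.mean()
    return np.clip(rgb - medians + target, 0.0, 1.0)


# Synthetic example: Ha background twice as bright as OIII gives a red cast.
ha = np.full((4, 4), 0.2)
oiii = np.full((4, 4), 0.1)
hoo = combine_hoo(ha, oiii)
balanced = neutralize_background(hoo, (slice(0, 2), slice(0, 2)))
print(np.median(balanced, axis=(0, 1)))
```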

Now it was time to apply HDRMultiscaleTransform. This is a very powerful tool, but also one where it is difficult to predict the outcome, so this too took some trial and error. In the end I found settings that gave the best balance between detail in the core and a natural-looking overall image.

Nebula after stretching (left). Using GHS, some of the detail in the nucleus could be preserved, but it was still largely overexposed. After HDRMultiscaleTransform (middle). A lot more detail in the nucleus is visible and overall there is more focus on the structure of the whole nebula. The HDRMT process (right). This takes quite a bit of trial and error. These were the values applied for this image.

Now it was time to select the desired colours for the nebula. For that purpose the NarrowbandNormalization script from Bill Blanshan was used. This offers different methods, brightness and boost options. I opted for Mode 1, which makes the OIII stand out as a fairly bright blue colour. The red from the Ha did not come through very much in general, so some selective boosting of the colour saturation was applied to the red colours. Some final tweaks with CurvesTransformation and noise removal using NXT completed the processing of the starless HOO image. Stars were put back in using PixelMath. Finally, a small extra tweak with CurvesTransformation and an overall adjustment of the background level to 0.07 completed the image.
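The exact PixelMath expression used to put the stars back is not given here. A common choice for recombining a stretched stars-only image with a starless image is a screen blend, sketched below in NumPy as an assumption rather than as the method actually used.

```python
import numpy as np


def screen_blend(starless, stars):
    """Screen-blend a stars-only image onto a starless image (both in [0, 1])."""
    # Equivalent to inverting both images, multiplying, and inverting the result.
    return 1.0 - (1.0 - starless) * (1.0 - stars)


# Where there is no star the background is untouched; a saturated star stays white.
background = np.array([0.07, 0.07, 0.50])
star_layer = np.array([0.00, 1.00, 0.50])
print(screen_blend(background, star_layer))
```

Unlike simple addition, a screen blend cannot push values above 1, so bright stars on top of bright nebulosity do not clip abruptly.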

There were several challenges in processing this image, of which managing the wide range of brightness values proved the toughest to address. And even after having gone through all the steps, I’m still not sure that I got the maximum out of it. When looking for similar images on Astrobin, I came across some variations where the nucleus was imaged with really short exposures, such as 1 or 2 seconds, with several hundred of those images then combined. Perhaps this is an approach to follow in a similar situation in the future, although I would imagine it brings its own challenges with regard to alignment etc. Anyone interested in the full detail of the nucleus of this planetary nebula should check out the Hubble Space Telescope data. They can be downloaded and processed as well, and show massive amounts of detail.

Processing workflow

This image has been published on Astrobin and received Top Pick Nomination status.